MuPT: Symbolic Music Generative Pre-trained Transformer

Abstract

MuPT is a series of pre-trained models for symbolic music generation. The models were trained on a large-scale dataset of symbolic music comprising millions of monophonic and polyphonic pieces across a range of genres and styles. They are built on the Llama 2 architecture and can be applied to downstream music generation tasks such as melody generation, accompaniment generation, and multi-track music generation.
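MuPT's own code is not reproduced on this page. As a rough illustration of what "generative pre-trained transformer" means for symbolic music, here is the generic autoregressive sampling loop used by decoder-only models. At each step the model scores every possible next token given the sequence so far, the scores are turned into probabilities, one token is sampled and appended, and the loop repeats until an end token appears. Everything named here is hypothetical: the tiny note vocabulary, `toy_next_token_logits` (a hand-written stand-in for a trained network), and `generate` are assumptions for illustration, not MuPT's tokenizer, model, or API.

```python
import math
import random
from typing import Callable, List, Sequence

# Hypothetical toy vocabulary of symbolic-music tokens (NOT MuPT's real tokenizer).
VOCAB: List[str] = ["C", "D", "E", "F", "G", "A", "B", "|", "<eos>"]
BAR = VOCAB.index("|")
EOS = VOCAB.index("<eos>")


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Turn logits into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def toy_next_token_logits(prefix: List[int]) -> List[float]:
    """Hypothetical stand-in for a trained decoder-only transformer.

    Favors stepping up one scale degree, strongly prefers a bar line after
    every 4 notes, and strongly prefers ending the piece after 16 notes.
    """
    notes = [t for t in prefix if t not in (BAR, EOS)]
    logits = [0.0] * len(VOCAB)
    if len(notes) >= 16:
        logits[EOS] = 10.0
        return logits
    if notes and len(notes) % 4 == 0 and prefix[-1] != BAR:
        logits[BAR] = 10.0
        return logits
    last = notes[-1] if notes else 0
    logits[(last + 1) % 7] = 3.0  # next scale degree, wrapping B -> C
    logits[BAR] = -10.0
    logits[EOS] = -10.0
    return logits


def generate(
    next_logits: Callable[[List[int]], List[float]],
    prompt: List[int],
    max_new_tokens: int = 64,
    temperature: float = 1.0,
    seed: int = 0,
) -> List[int]:
    """Autoregressive sampling: score, sample one token, append, repeat."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(next_logits(tokens), temperature)
        nxt = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        tokens.append(nxt)
        if nxt == EOS:  # stop as soon as the end-of-piece token is sampled
            break
    return tokens


if __name__ == "__main__":
    out = generate(toy_next_token_logits, [VOCAB.index("C")], temperature=0.5)
    print(" ".join(VOCAB[t] for t in out))
```

Lowering `temperature` keeps the sampler closer to the most likely continuation, while raising it produces more varied output; the fixed `seed` only makes this toy run reproducible.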

Authors

Corresponding Authors

Wenhu Chen1,2,6, Jie Fu1,3, Ge Zhang1,2,6

Equal Technical Contributions

Xingwei Qu1,3,4, Yuelin Bai5, Yinghao Ma1,7, Ge Zhang1,2,6

Additional Authors

Ziya Zhou3, Ka Man Lo3, Jiaheng Liu1, Ruibin Yuan1,3, Lejun Min8, Xueling Liu5, Tianyu Zhang9, Xinrun Du1, Shuyue Guo1, Yiming Liang10, Yizhi LI1,4, Shangda Wu11, Junting Zhou12, Tianyu Zheng1, Ziyang Ma13, Fengze Han1, Wei Xue3, Gus Xia8, Emmanouil Benetos7, Xiang Yue1, Chenghua Lin4, Xu Tan14, Stephen W. Huang15

M-A-P1, University of Waterloo2, HKUST3, University of Manchester4, Shenzhen Institute of Advanced Technology, CAS5, Vector Institute6, QMUL7, MBZUAI8, MILA9, Institute of Automation, CAS10, Central Conservatory of Music11, PKU12, SJTU13, MSRA14, harmony.ai15

SMS Law

MuPT Model Checkpoints HF-Link

Examples

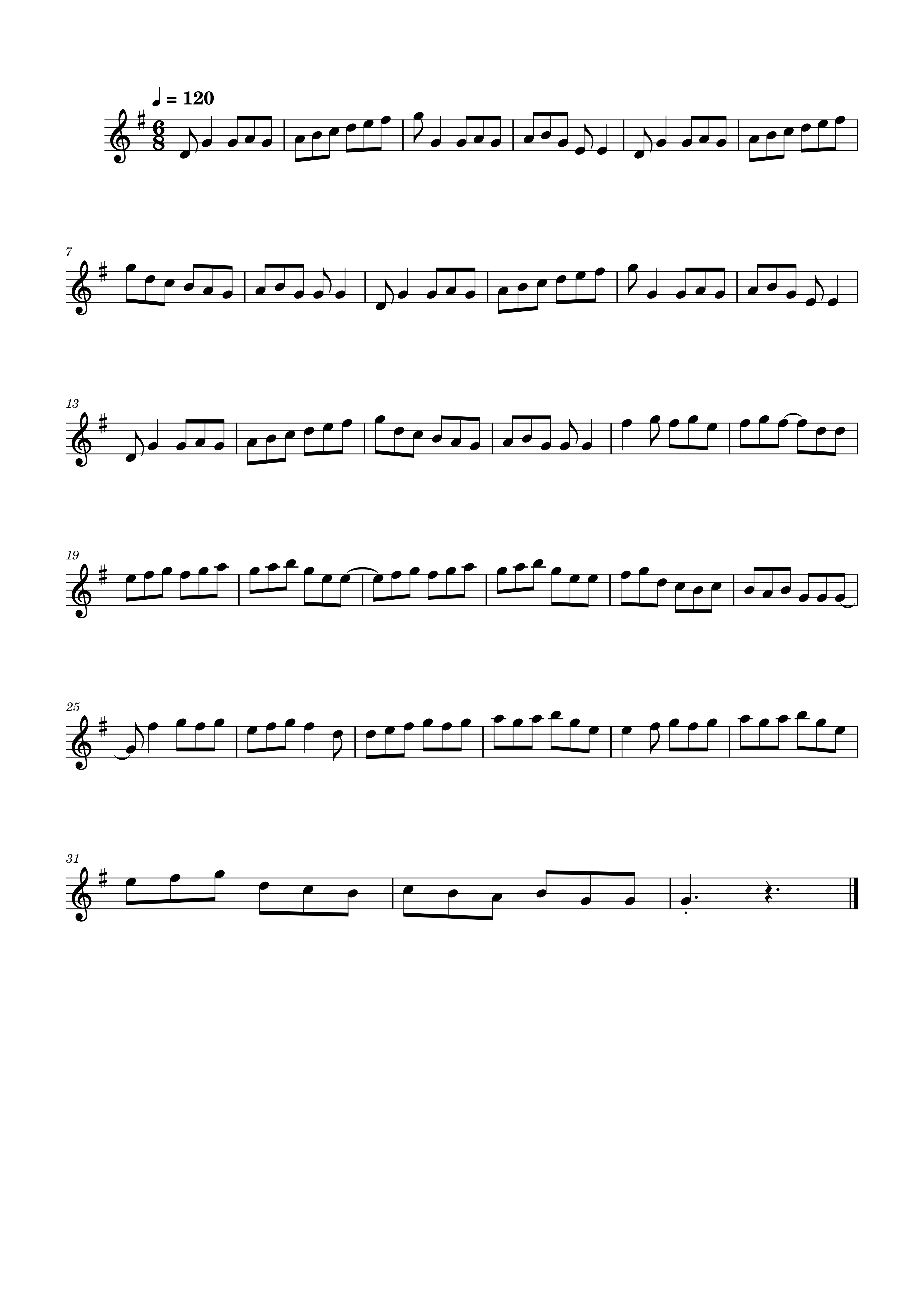

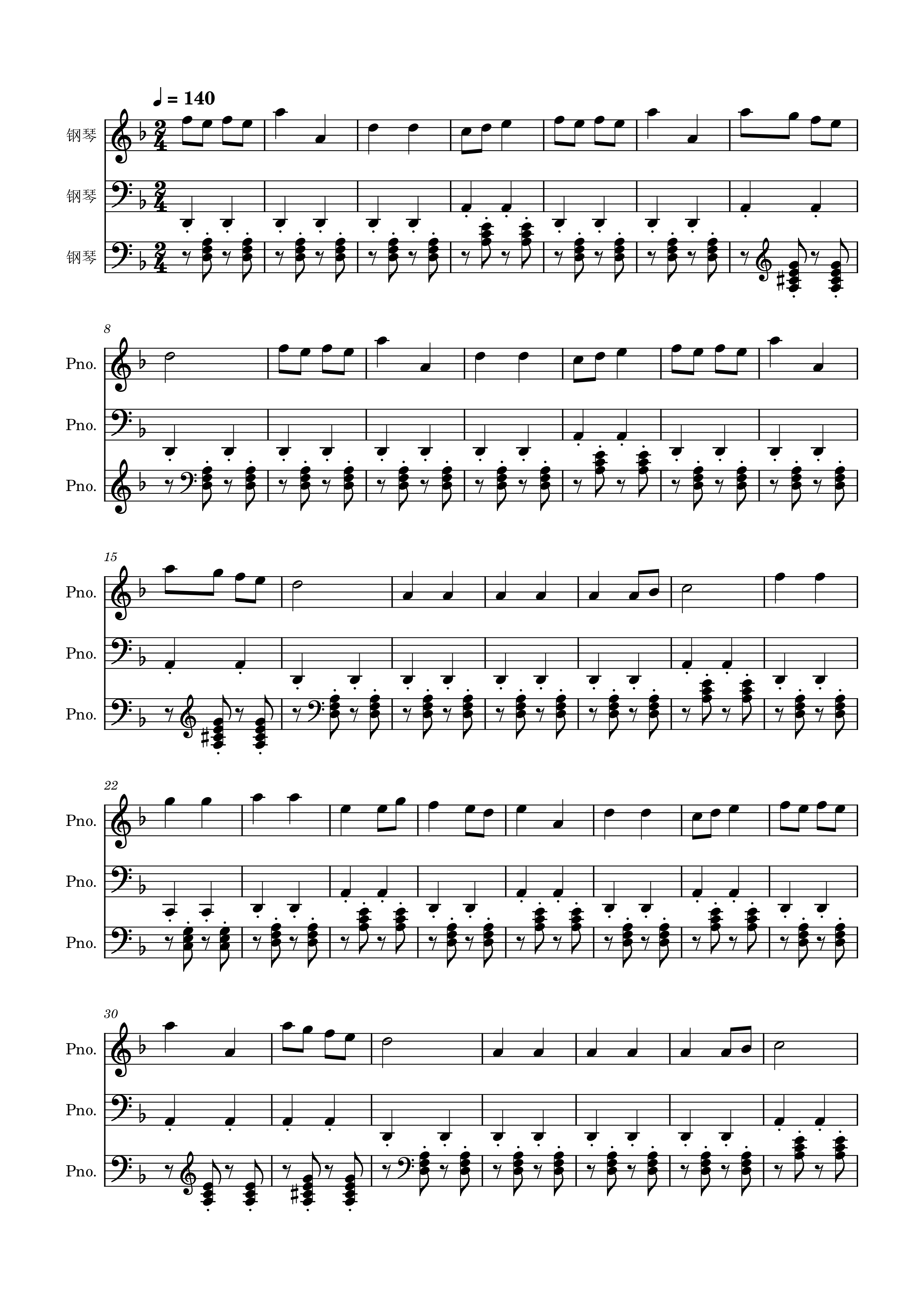

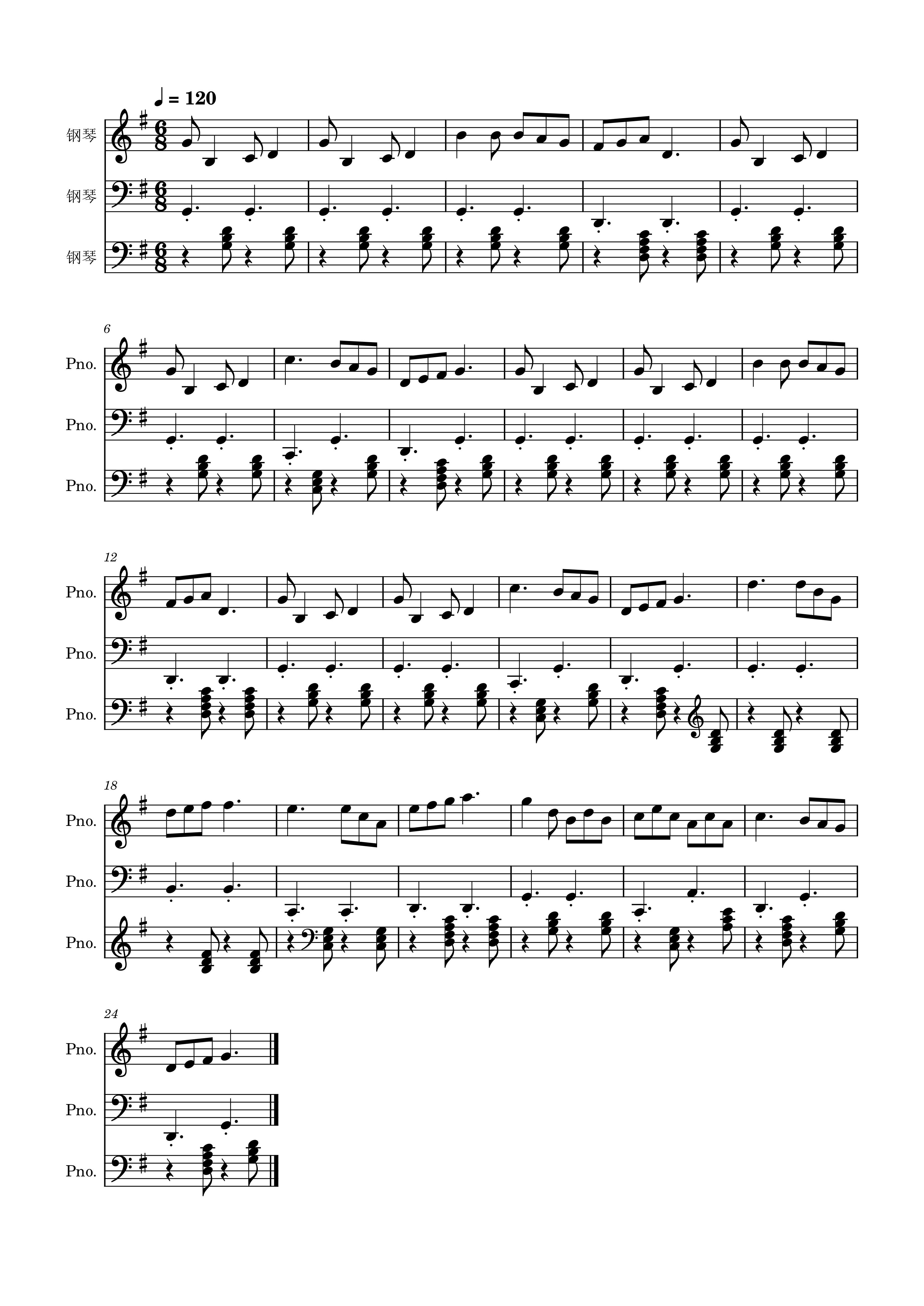

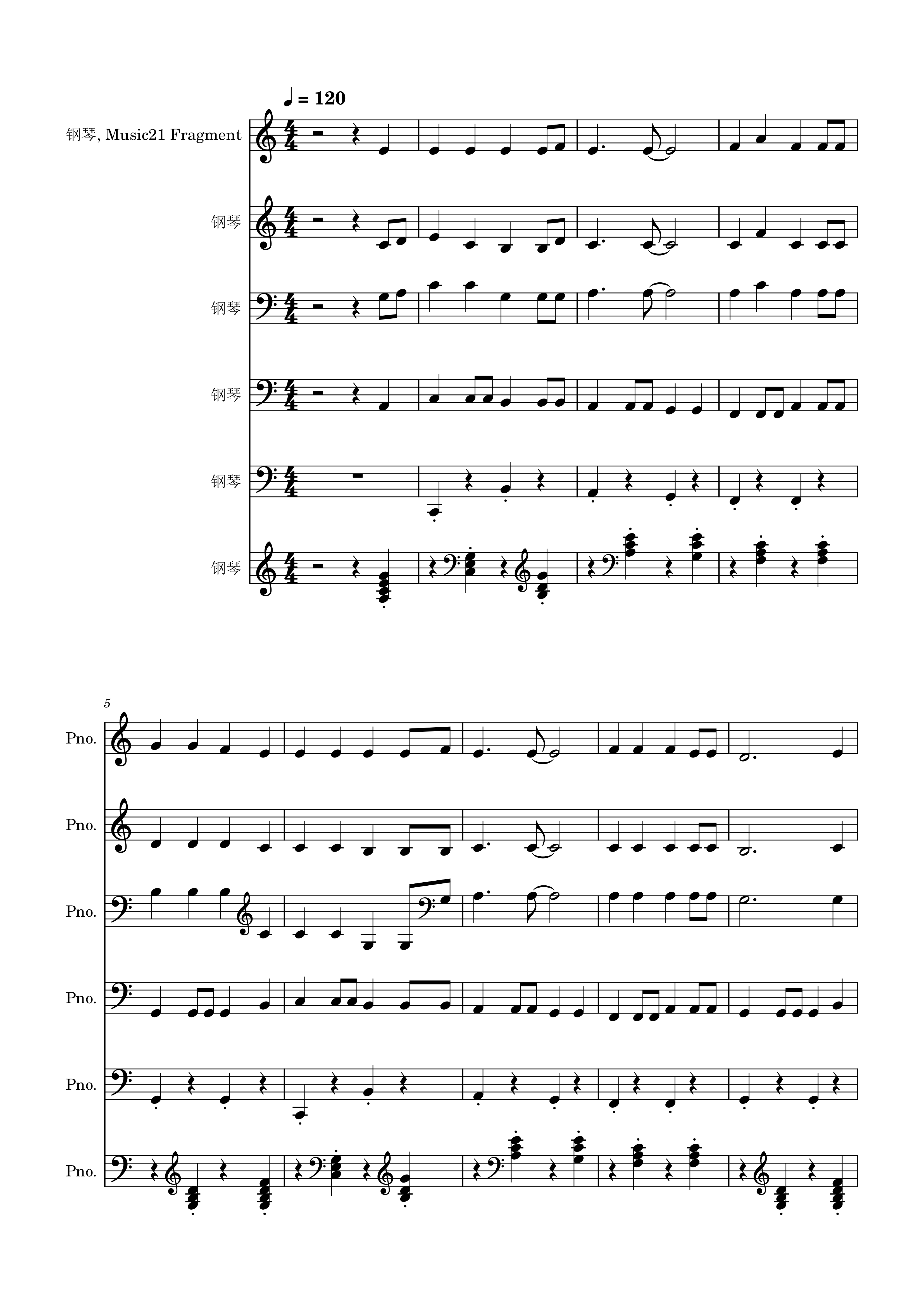

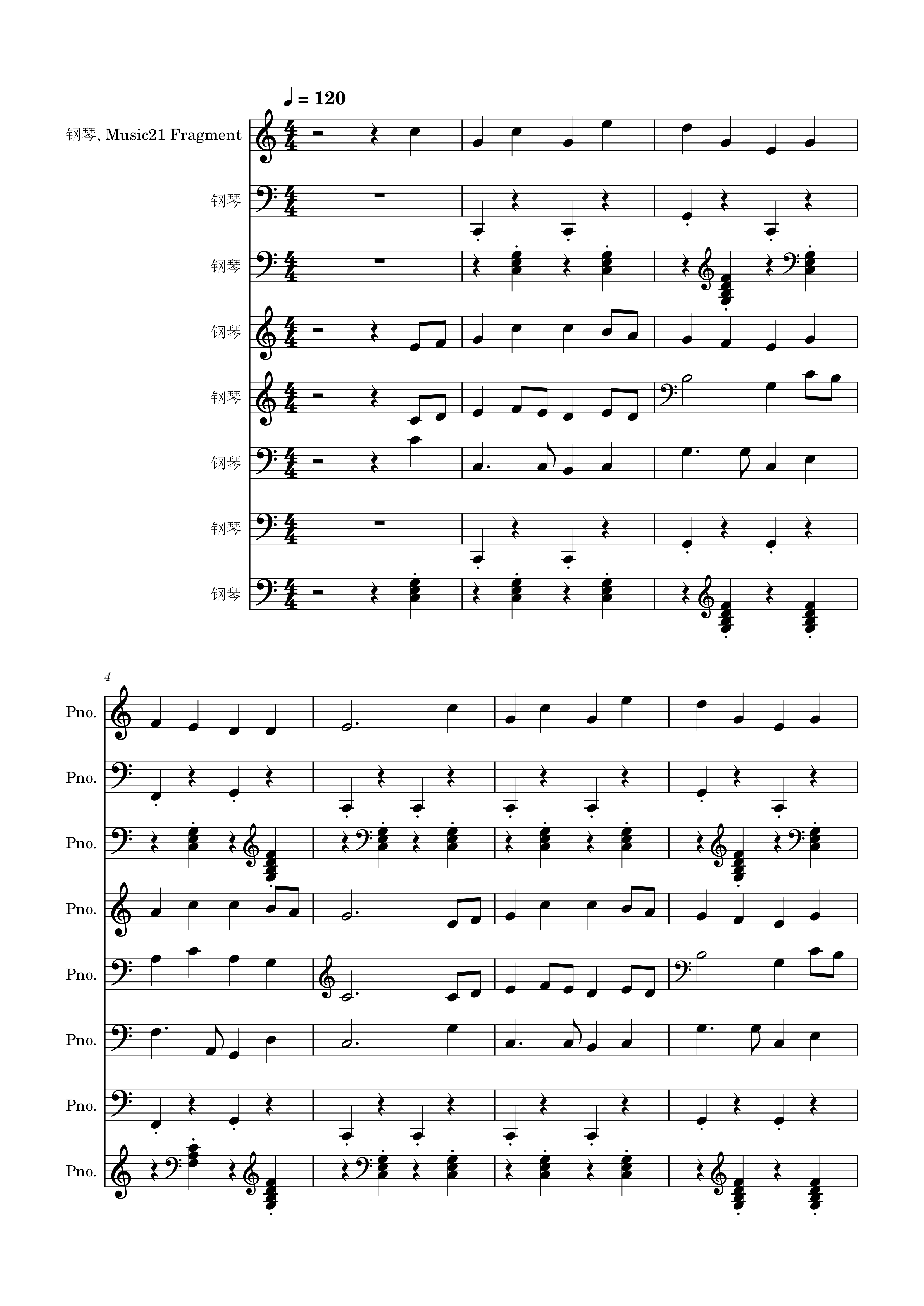

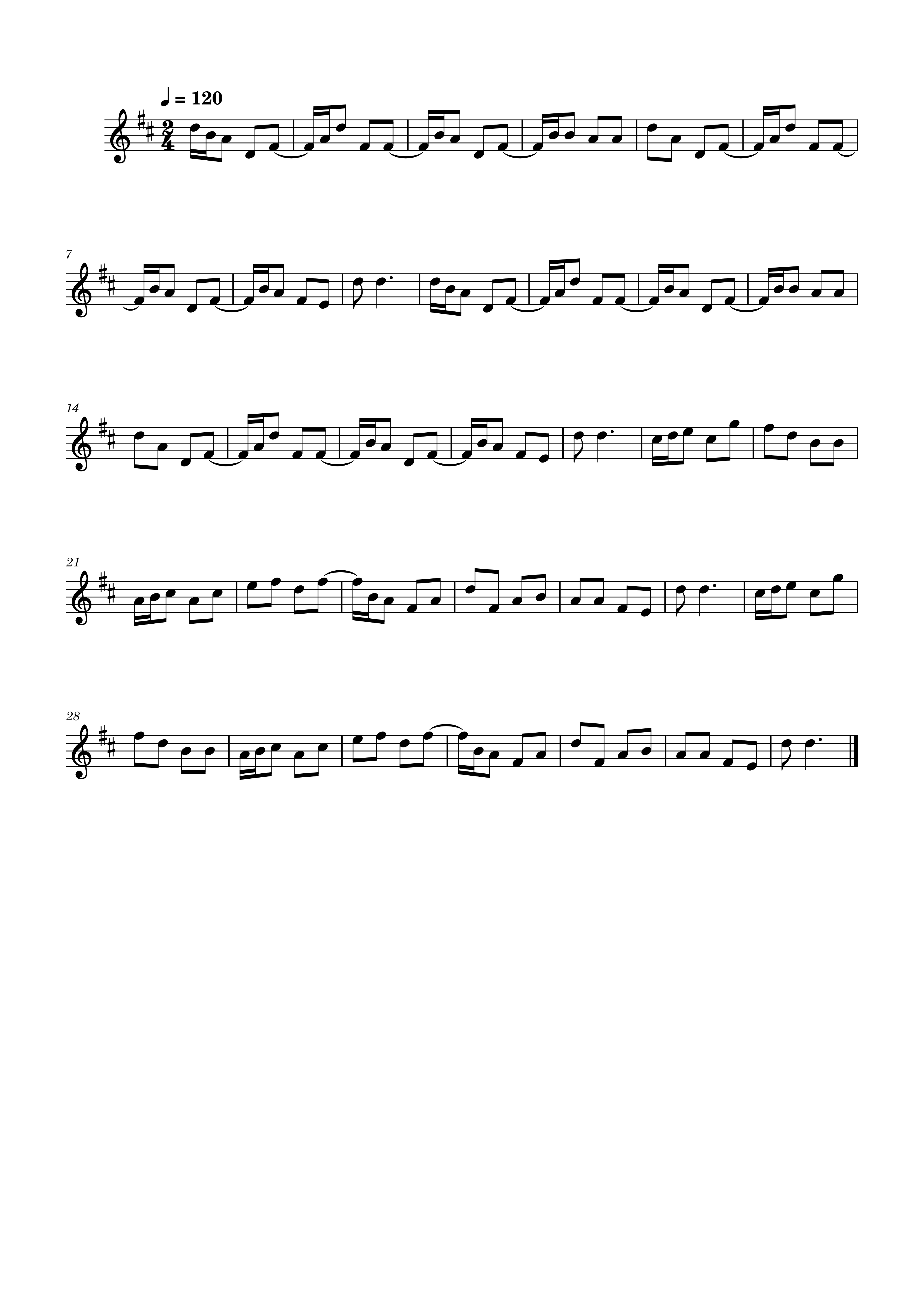

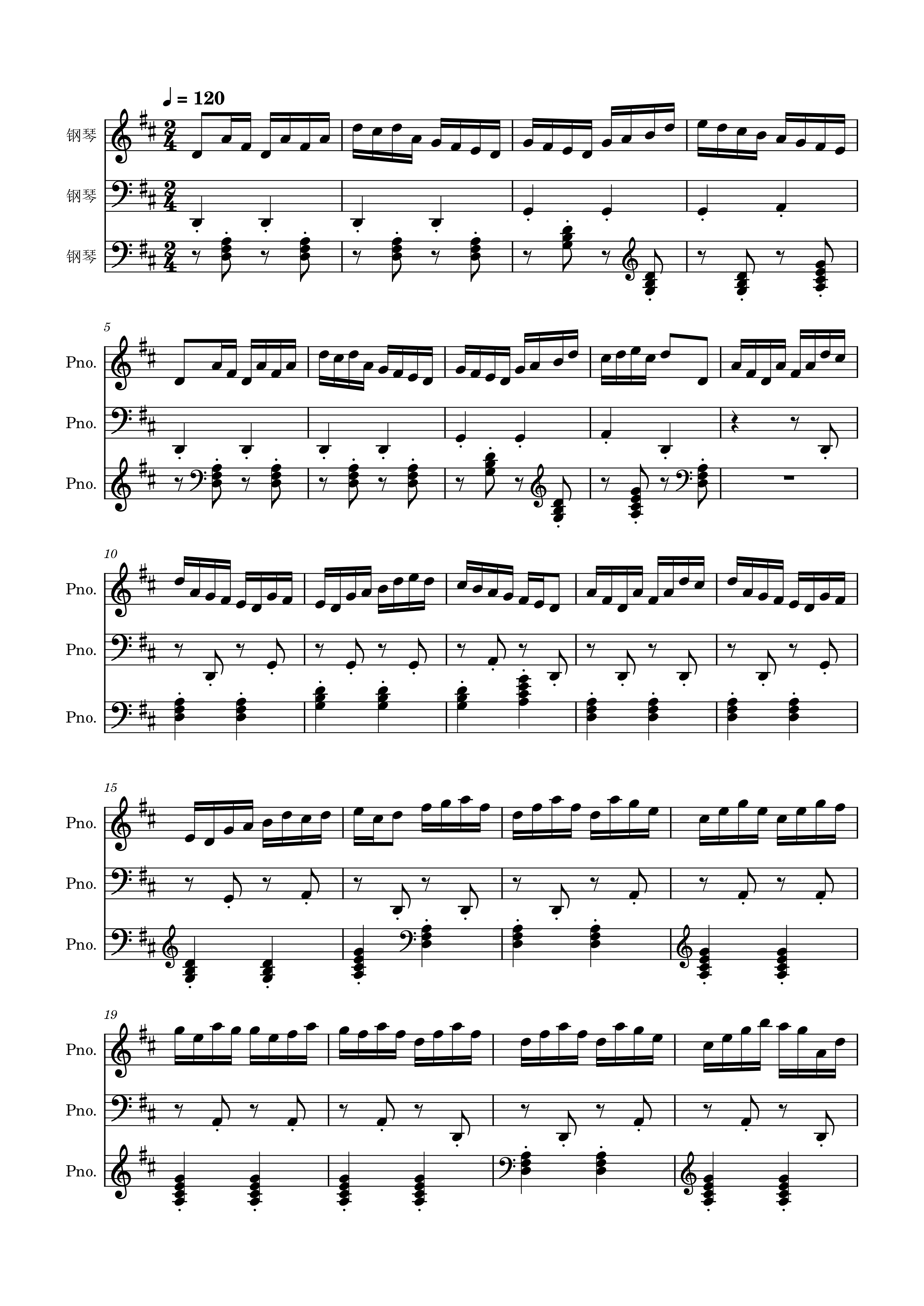

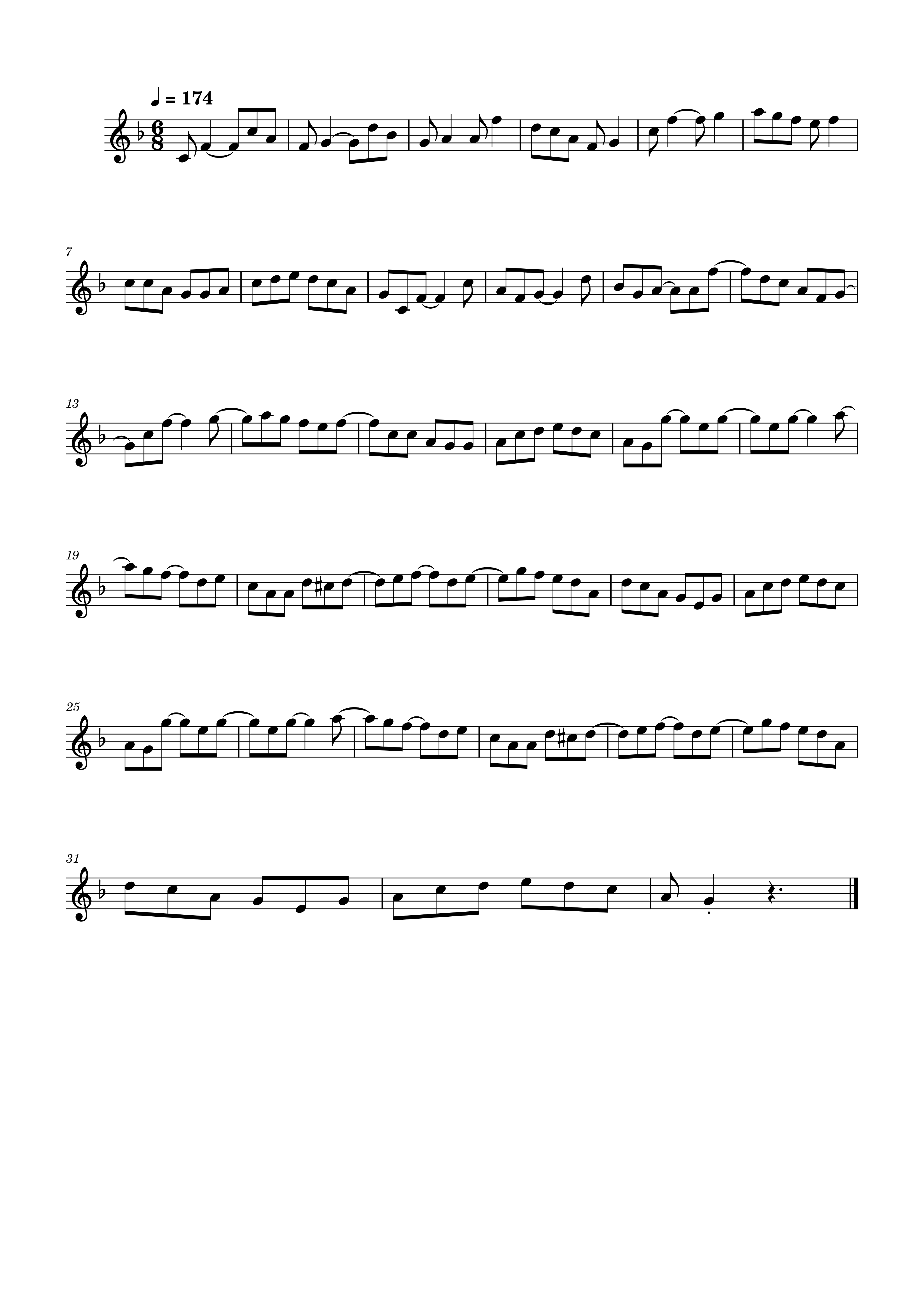

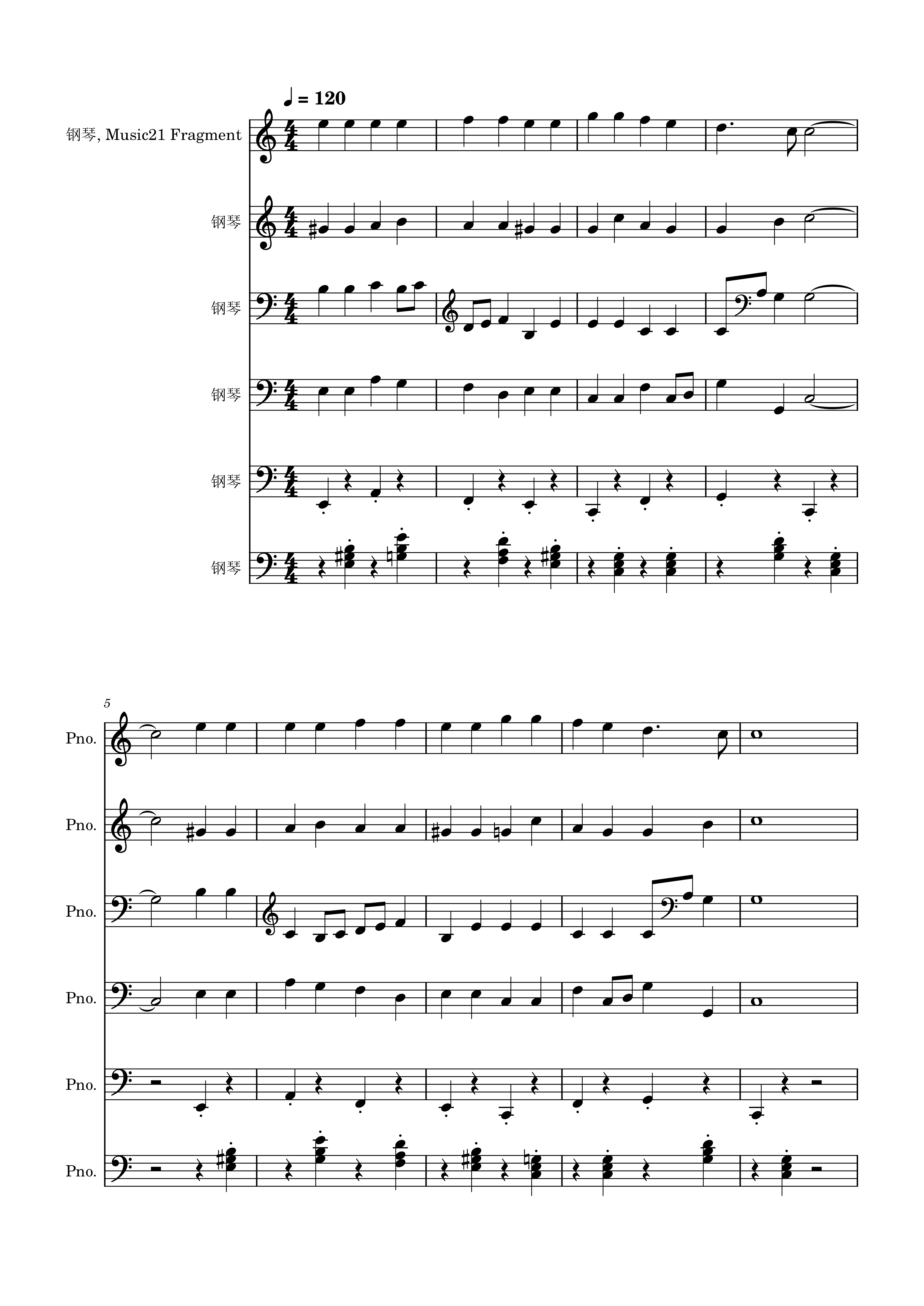

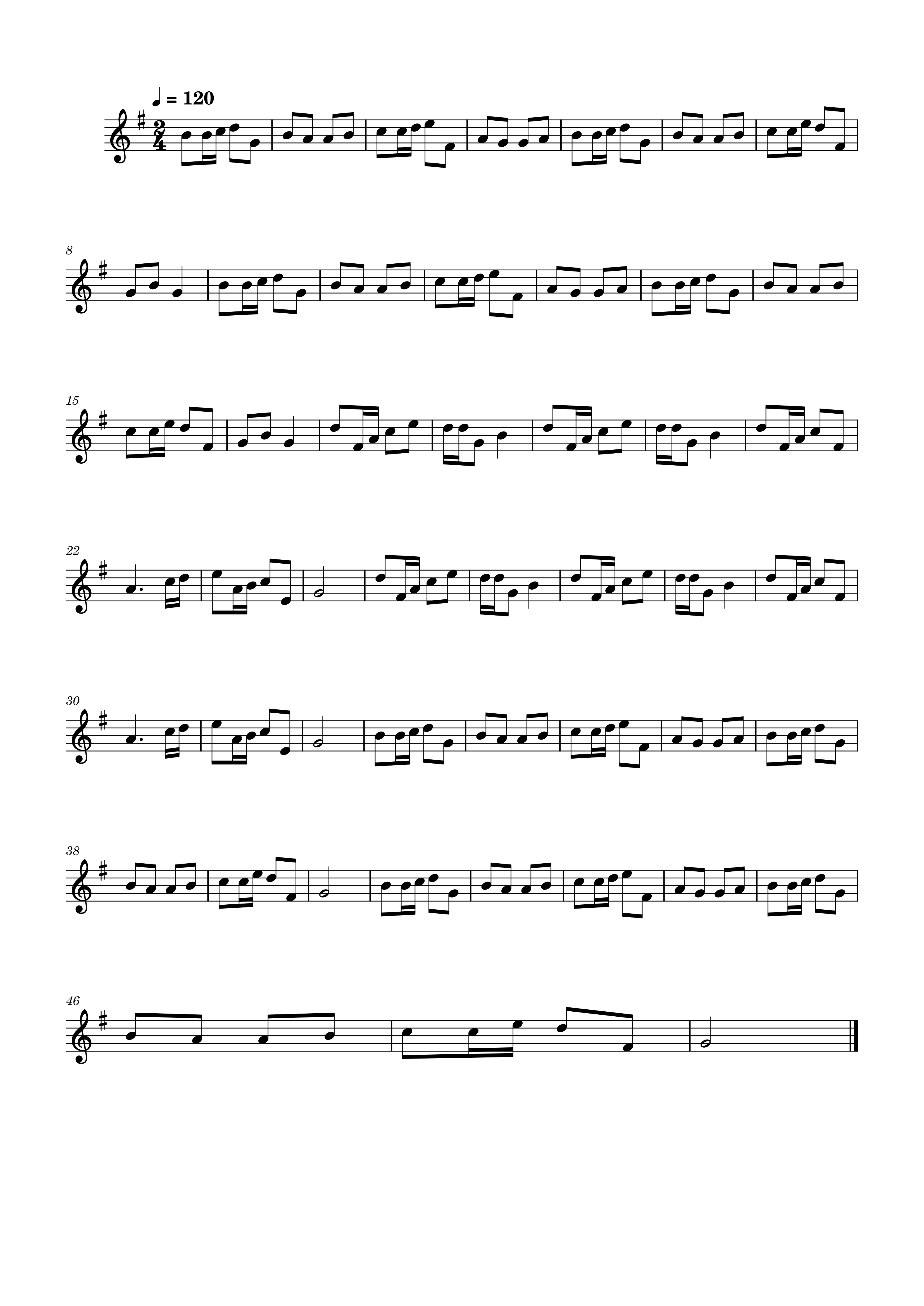

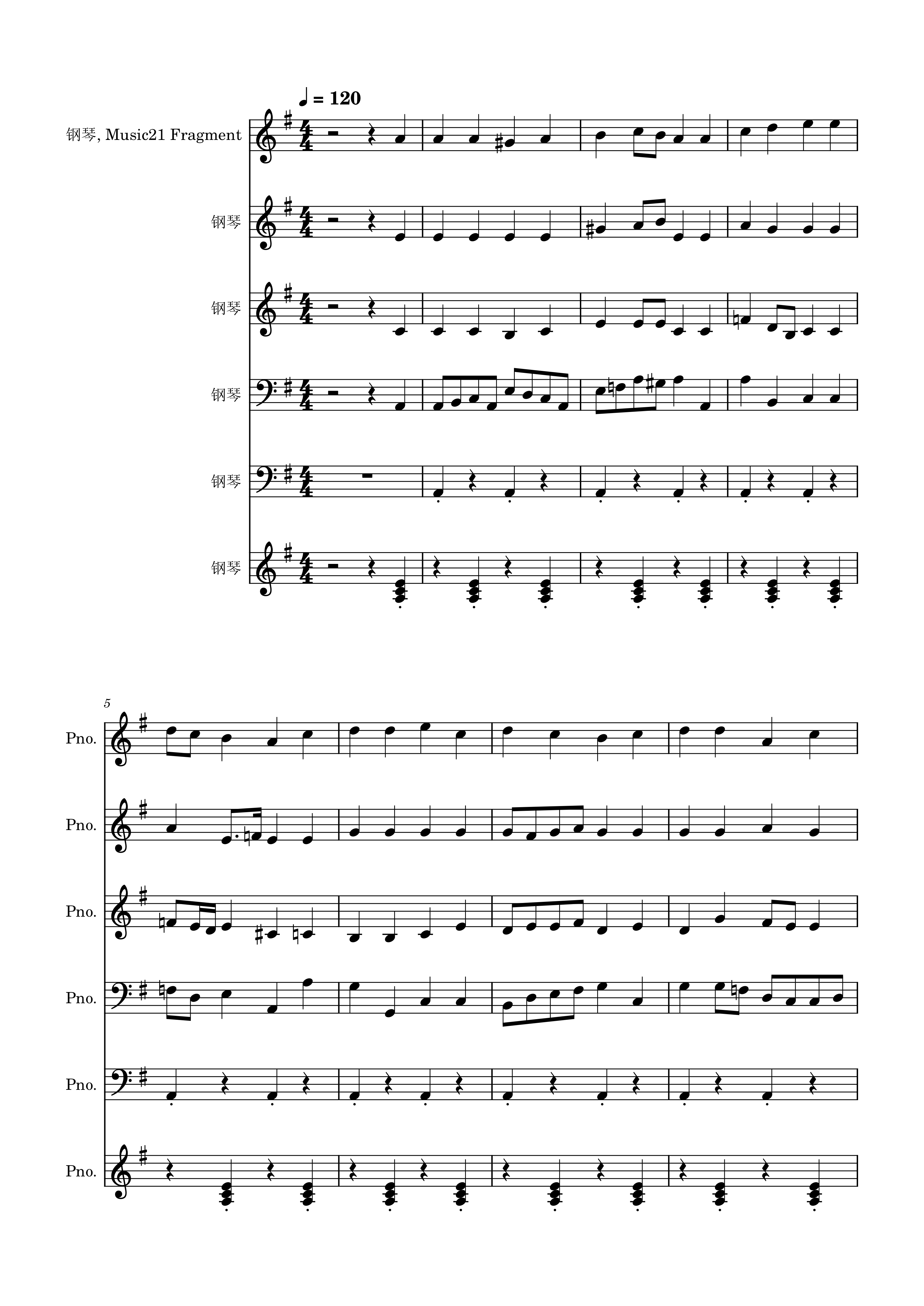

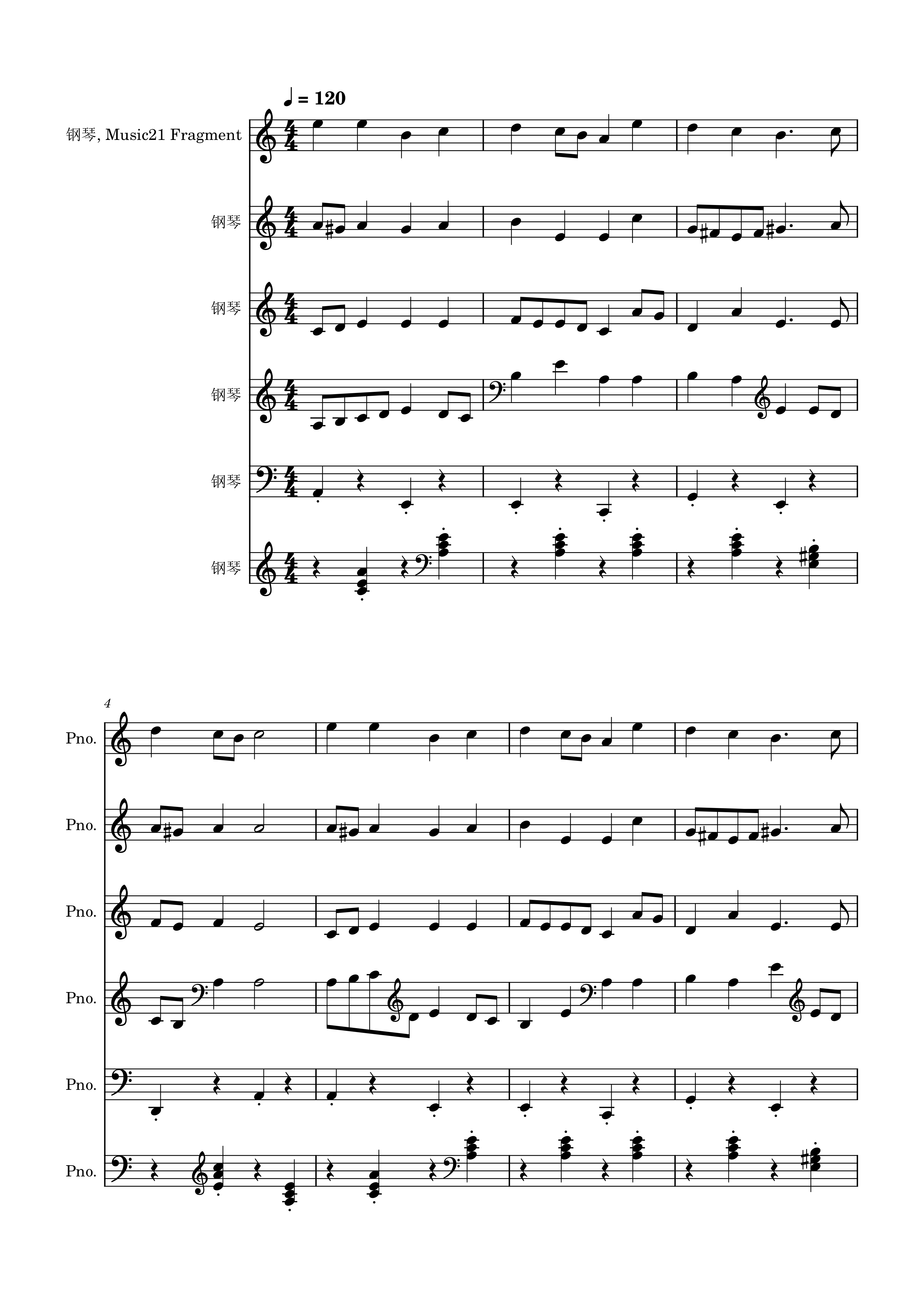

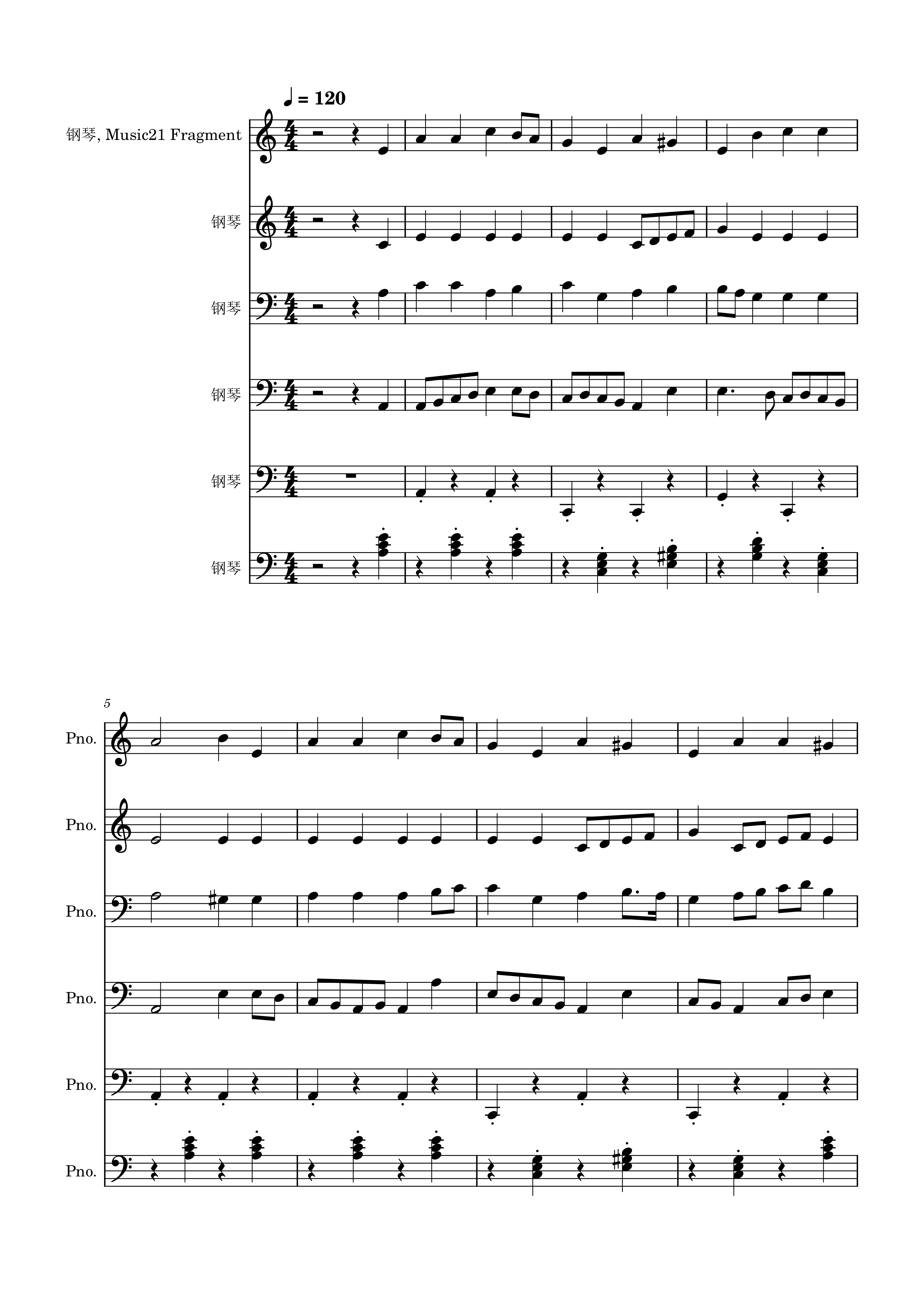

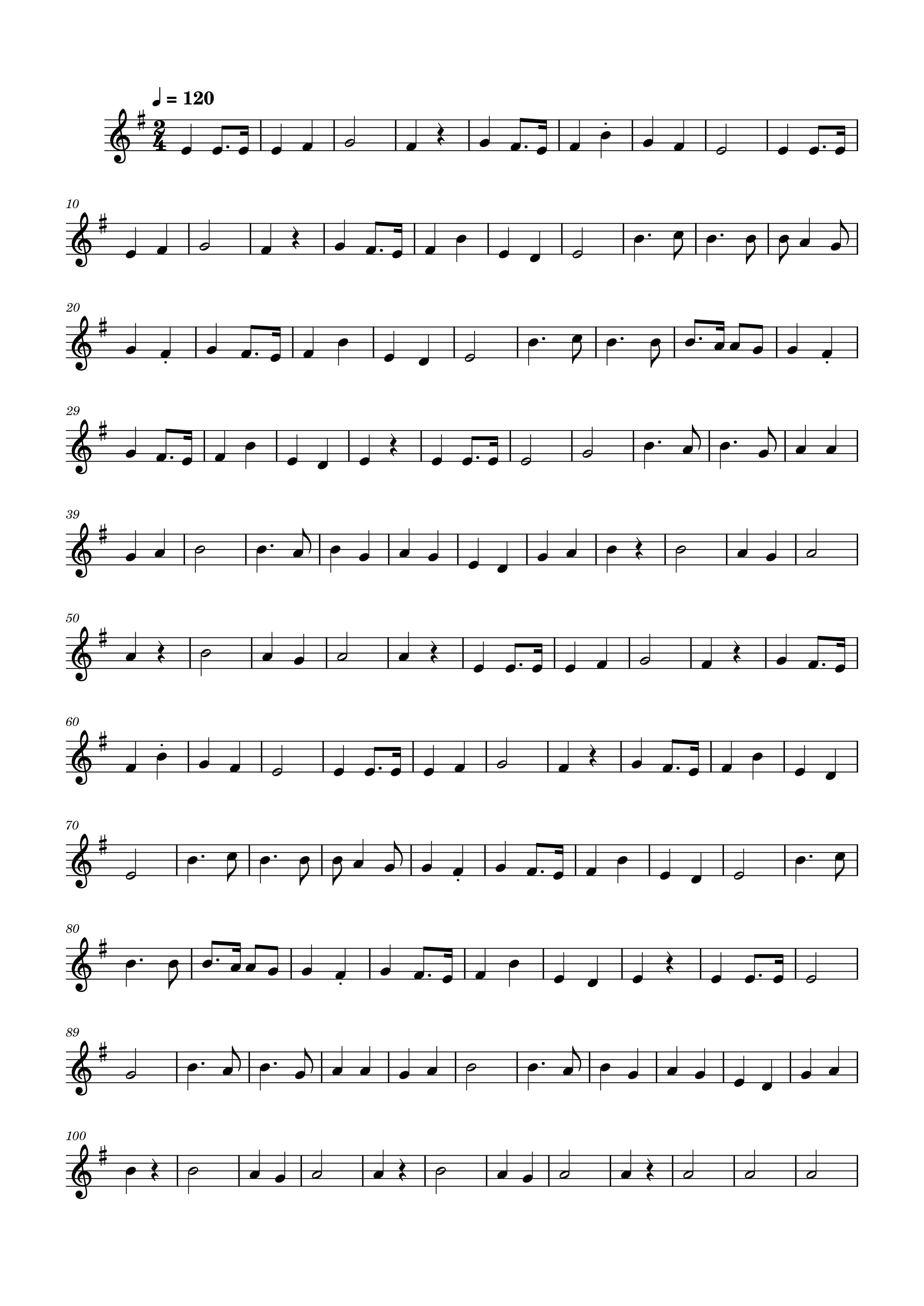

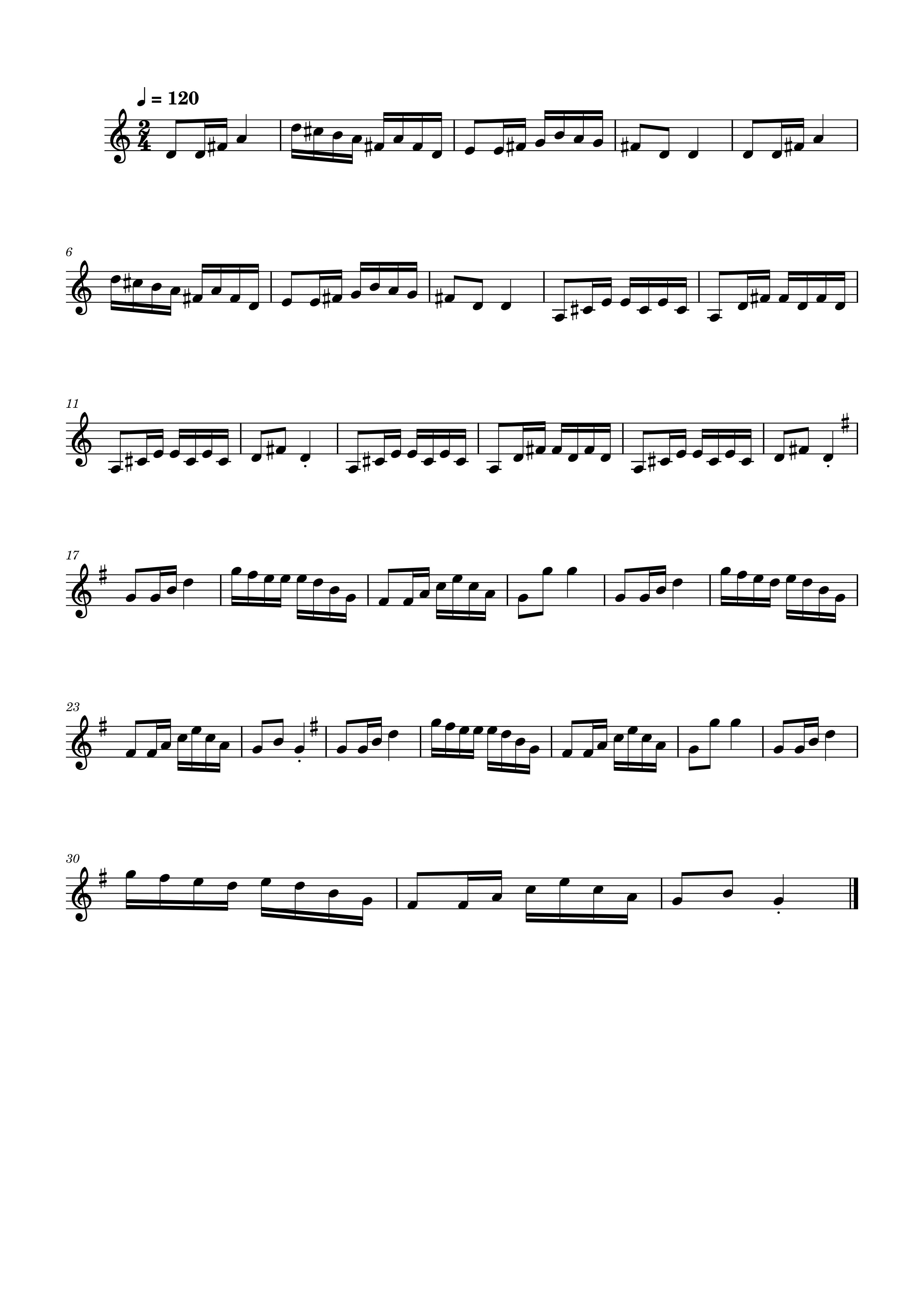
